October 8, 2025 Web Desk

Artificial Intelligence (AI) has seamlessly integrated into our daily lives, becoming an indispensable tool for quick access to information and content. Its popularity stems from its ability to not only aggregate human knowledge but also generate novel ideas and concepts independently.

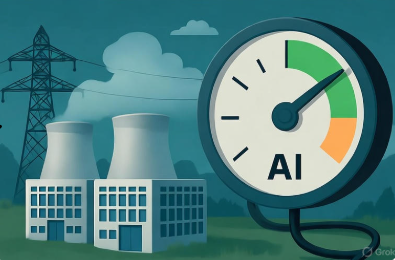

While many harness AI for educational and creative pursuits, others exploit it for commercial or even nefarious purposes. On the surface, AI simplifies our existence, but beneath this convenience lies a steep, often overlooked cost: energy consumption. Training and operating generative AI models demand vast amounts of electricity, raising alarms about sustainability.

Training an AI model is akin to feeding it an ocean of data: the larger and more complex the dataset, the greater the energy required. Running the model is costly too. By some estimates, a single query on ChatGPT consumes nearly 10 times as much energy as a traditional Google search, and generating just one image with a generative AI model uses about as much energy as fully charging a smartphone.

These models rely on massive data centers equipped with high-performance processors and intensive cooling systems that run nonstop. The result? Soaring electricity demands that dwarf the energy needs of the human brain. For context, the brain, a marvel of biological computing, operates on a mere 12-20 watts, equivalent to a dim lightbulb. In stark contrast, simulating a fraction of the brain’s capabilities with current AI hardware could require up to 2.7 billion watts, more than a hundred million times the brain’s power draw. A single ChatGPT query alone uses an estimated 2.9 watt-hours, compared to 0.3 for a Google search.
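The comparisons in this passage are simple ratios, and they can be checked with a few lines of Python. The sketch below takes the quoted figures as given rather than verifying them, and the variable and function names are illustrative only.

```python
# Figures as quoted in the article; taken as given, not independently verified.
BRAIN_WATTS_LOW = 12.0    # lower estimate of the brain's power draw
BRAIN_WATTS_HIGH = 20.0   # upper estimate of the brain's power draw
AI_SIM_WATTS = 2.7e9      # quoted power to simulate part of the brain
CHATGPT_WH = 2.9          # quoted energy per ChatGPT query (watt-hours)
GOOGLE_WH = 0.3           # quoted energy per Google search (watt-hours)


def ratio(numerator: float, denominator: float) -> float:
    """Return how many times larger the numerator is than the denominator."""
    return numerator / denominator


# Power gap between AI hardware and the brain, for both brain estimates.
gap_vs_20w = ratio(AI_SIM_WATTS, BRAIN_WATTS_HIGH)
gap_vs_12w = ratio(AI_SIM_WATTS, BRAIN_WATTS_LOW)

# Energy gap between one ChatGPT query and one Google search.
query_gap = ratio(CHATGPT_WH, GOOGLE_WH)

print(f"AI vs brain: {gap_vs_20w:,.0f}x to {gap_vs_12w:,.0f}x")
print(f"ChatGPT vs Google search: {query_gap:.1f}x")
```

On these numbers the hardware gap comes out in the low hundreds of millions, and the per-query gap is a little under ten.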

Globally, data centers consumed around 460 terawatt-hours of electricity in 2022, and AI accounted for about 8% of data center demand in 2023, or roughly 4.5 gigawatts of continuous power. Projections paint a concerning picture: by 2028, AI's draw could reach 14-18.7 gigawatts worldwide, up to 20% of total data center consumption, while data centers' share of U.S. electricity use could nearly triple, from 4.4% to 12%. This surge could emit as much CO2 as driving 300 billion miles annually.
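These figures mix two kinds of unit: terawatt-hours measure energy used over a year, while gigawatts measure the rate of use at any moment. A rough conversion makes them comparable. The sketch below assumes a steady, round-the-clock draw and a 365-day year, both simplifications, and its function names are illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; assumes a non-leap year


def gw_to_twh_per_year(gigawatts: float) -> float:
    """Energy used in a year by a constant draw of `gigawatts`."""
    return gigawatts * HOURS_PER_YEAR / 1000.0  # GWh -> TWh


def twh_per_year_to_gw(terawatt_hours: float) -> float:
    """Average continuous power that adds up to `terawatt_hours` in a year."""
    return terawatt_hours * 1000.0 / HOURS_PER_YEAR  # TWh -> GWh, then / hours


# 8% of the 460 TWh quoted for data centers (2022 total, used as a baseline).
ai_share_twh = 0.08 * 460.0

# The 4.5 GW quoted for AI in 2023, expressed as annual energy.
ai_2023_twh = gw_to_twh_per_year(4.5)

# The 2028 projection range, expressed as annual energy.
ai_2028_low_twh = gw_to_twh_per_year(14.0)
ai_2028_high_twh = gw_to_twh_per_year(18.7)

print(f"8% of 460 TWh: {ai_share_twh:.1f} TWh")
print(f"4.5 GW for a year: {ai_2023_twh:.1f} TWh")
print(f"2028 range: {ai_2028_low_twh:.0f} to {ai_2028_high_twh:.0f} TWh")
```

Under these assumptions the 8% share and the 4.5-gigawatt figure land within a few terawatt-hours of each other, which suggests they describe the same quantity in different units.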

The environmental toll is undeniable, but hope lies in innovation. Researchers are developing energy-thrifty models like DeepSeek, a Chinese AI breakthrough that reportedly trains high-performing systems using 50-75% less power than rival systems, with just 2,000 chips versus 16,000 or more for U.S. counterparts. Such advances, along with brain-inspired approaches such as “Super-Turing AI”, could slash costs and emissions, paving the way for greener AI.

As AI evolves, curbing its energy appetite through efficient algorithms and renewable integration is crucial. Without action, this “silent crisis” could overload grids and exacerbate climate change—but with smart design, AI might yet become a sustainable ally.